This article shows how to run optical character recognition (OCR) on scientific documents stored as PDFs, using a machine learning method called Nougat.

Nougat

Overview

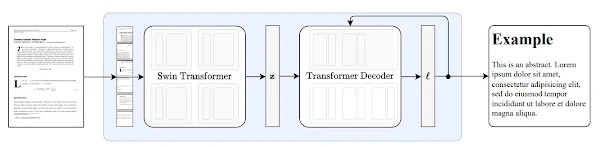

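Nougat is an optical character recognition (OCR) technique that converts scientific documents stored as PDFs into a markup language.

Neural Optical Understanding for Academic Documents (Nougat) is based on a Visual Transformer model. It takes scientific documents that were saved as PDFs, a format in which the semantic information of mathematical expressions is lost, and converts them into a markup language.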

Source: Nougat: Neural Optical Understanding for Academic Documents

For details, please refer to the paper.

In this article, we use this method to OCR a paper from arXiv.

Demo (Colaboratory)

Let's run the model and OCR a scientific document.

The source code is included in this article, and is also available from the GitHub repository below.

GitHub - Colaboratory demo

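You can also open the notebook directly in Google Colaboratory.

Note that this demo is implemented in Python.

If you are unsure about Python, or want to study machine learning with Python in more depth, the following books and online courses are recommended.

Recommended books

[For beginners] Recommended books I read before getting started with machine learning in Python

The linked article introduces, by level, the books that the author, a working machine learning engineer, actually read and recommends.

Recommended online courses

[For beginners] Recommended online courses for learning machine learning from scratch

The linked article introduces online courses that the author, a machine learning engineer, recommends as approachable even for readers with no background in AI or machine learning.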

Environment setup

Let's set up the environment. After opening Colaboratory, change the runtime settings so that a GPU is used.
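To confirm that the runtime really has a GPU attached, a quick check can be run in a notebook cell. The following is a minimal sketch that assumes an NVIDIA GPU whose driver ships the `nvidia-smi` tool; `gpu_visible` is a helper name made up for this example.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if nvidia-smi is on PATH and exits successfully."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # No NVIDIA driver tools found, so no GPU is visible this way.
        return False
    try:
        result = subprocess.run([exe], capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


print("GPU visible:", gpu_visible())
```

If this prints False, the runtime type is probably still set to CPU.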

The only setup step is installing Nougat.

!pip install 'git+https://github.com/facebookresearch/nougat@a017de1f5501fbeb6cd8b7c302f9a075b2b4bee2'

That completes the environment setup.
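One way to verify the installation is to check that the nougat command used later in this article can be found on PATH. This is only a sketch using the standard library; `find_cli` is a hypothetical helper, not something provided by Nougat.

```python
import shutil
from typing import Optional


def find_cli(name: str = "nougat") -> Optional[str]:
    """Return the full path of a command on PATH, or None if it is absent."""
    return shutil.which(name)


path = find_cli()
print("nougat CLI:", path if path else "not found on PATH")
```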

PDF setup

Here we download a scientific document, saved as a PDF, from arXiv.

We use the wget command to download the Nougat paper from arXiv.

!wget \
  --user-agent="Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36" \
  https://arxiv.org/pdf/2308.13418.pdf \
  -O ./arxiv_nougat.pdf
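The same download can also be written with Python's standard library, which may be handy outside a notebook. The following is a minimal sketch that sends the same User-Agent header as the wget command; `build_request` and `download_pdf` are names invented for this example.

```python
import shutil
import urllib.request

ARXIV_URL = "https://arxiv.org/pdf/2308.13418.pdf"
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36"
)


def build_request(url: str, user_agent: str = USER_AGENT) -> urllib.request.Request:
    """Create a GET request that carries a browser-like User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def download_pdf(url: str, dest: str, user_agent: str = USER_AGENT) -> str:
    """Stream the response body to dest and return the destination path."""
    req = build_request(url, user_agent)
    with urllib.request.urlopen(req, timeout=60) as resp, open(dest, "wb") as out:
        shutil.copyfileobj(resp, out)
    return dest
```

Calling download_pdf(ARXIV_URL, "./arxiv_nougat.pdf") would then save the paper under the same file name as the wget command.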

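OCR

Now let's OCR the paper.

We run OCR with the nougat command. For a description of the options, please refer to the Nougat documentation.

The first run finishes in about a minute and a half, including the model download.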

%%time
!nougat ./arxiv_nougat.pdf \
  -o ./result \
  -m 0.1.0-small \
  -p 1,8-10
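The -p 1,8-10 argument in the command above selects which pages to process. The value appears to mean page 1 plus pages 8 through 10; that the numbers are 1-based and the range is inclusive is an assumption here. The sketch below shows one way such a page spec can be expanded into a list. It only illustrates the notation and is not Nougat's own parser; `parse_pages` is a hypothetical helper.

```python
from typing import List


def parse_pages(spec: str) -> List[int]:
    """Expand a spec such as '1,8-10' into [1, 8, 9, 10]."""
    pages: List[int] = []
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate stray commas
        if "-" in part:
            start_s, end_s = part.split("-", 1)
            start, end = int(start_s), int(end_s)
            if start > end:
                raise ValueError(f"invalid range: {part!r}")
            pages.extend(range(start, end + 1))  # inclusive upper bound
        else:
            pages.append(int(part))
    return pages


print(parse_pages("1,8-10"))  # [1, 8, 9, 10]
```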

The OCR result is shown below.

Ordinary text is recognized accurately, and elements such as tables are reproduced as well.

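import pprint

# Read the .mmd file that nougat wrote to the output directory
with open('./result/arxiv_nougat.mmd', encoding='utf-8') as f:
    s = f.read()

# Pretty-print the raw string so that newlines and escapes stay visible
pprint.pprint(s)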

('# Nougat: Neural Optical Understanding for Academic Documents\n'

'\n'

'Lukas Blecher\n'

'\n'

'Correspondence to: lblecher@meta.com\n'

'\n'

'Guillem Cucurull\n'

'\n'

'Thomas Scialom\n'

'\n'

'Robert Stojnic\n'

'\n'

'Meta AI\n'

'\n'

'The paper reports 8.1M papers but the authors recently updated the numbers '

'on the GitHub page https://github.com/allenai/s2orc\n'

'\n'

'###### Abstract\n'

'\n'

'Scientific knowledge is predominantly stored in books and scientific '

'journals, often in the form of PDFs. However, the PDF format leads to a loss '

'of semantic information, particularly for mathematical expressions. We '

'propose Nougat (**N**eural **O**ptical **U**nderstanding for **A**cademic '

'**D**cuments), a Visual Transformer model that performs an _Optical '

'Character Recognition_ (OCR) task for processing scientific documents into a '

'markup language, and demonstrate the effectiveness of our model on a new '

'dataset of scientific documents. The proposed approach offers a promising '

'solution to enhance the accessibility of scientific knowledge in the digital '

'age, by bridging the gap between human-readable documents and '

'machine-readable text. We release the models and code to accelerate future '

'work on scientific text recognition.\n'

'\n'

'## 1 Introduction\n'

'\n'

'The majority of scientific knowledge is stored in books or published in '

'scientific journals, most commonly in the Portable Document Format (PDF). '

'Next to HTML, PDFs are the second most prominent data format on the '

'internet, making up 2.4% of common crawl [1]. However, the information '

'stored in these files is very difficult to extract into any other formats. '

'This is especially true for highly specialized documents, such as scientific '

'research papers, where the semantic information of mathematical expressions '

'is lost.\n'

'\n'

'Existing Optical Character Recognition (OCR) engines, such as Tesseract OCR '

'[2], excel at detecting and classifying individual characters and words in '

'an image, but fail to understand the relationship between them due to their '

'line-by-line approach. This means that they treat superscripts and '

'subscripts in the same way as the surrounding text, which is a significant '

'drawback for mathematical expressions. In mathematical notations like '

'fractions, exponents, and matrices, relative positions of characters are '

'crucial.\n'

'\n'

'Converting academic research papers into machine-readable text also enables '

'accessibility and searchability of science as a whole. The information of '

'millions of academic papers can not be fully accessed because they are '

'locked behind an unreadable format. Existing corpora, such as the S2ORC '

'dataset [3], capture the text of 12M2 papers using GROBID [4], but are '

'missing meaningful representations of the mathematical equations.\n'

'\n'

'Footnote 2: The paper reports 8.1M papers but the authors recently updated '

'the numbers on the GitHub page https://github.com/allenai/s2orc\n'

'\n'

'To this end, we introduce Nougat, a transformer based model that can convert '

'images of document pages to formatted markup text.\n'

'\n'

'The primary contributions in this paper are\n'

'\n'

'* Release of a pre-trained model capable of converting a PDF to a '

'lightweight markup language. We release the code and the model on GitHub3 '

'Footnote 3: https://github.com/facebookresearch/nougat\n'

'* We introduce a pipeline to create dataset for pairing PDFs to source code\n'

'* Our method is only dependent on the image of a page, allowing access to '

'scanned papers and books\n'

'\n'

'### Repetitions during inference\n'

'\n'

'We notice that the model degenerates into repeating the same sentence over '

'and over again. The model can not recover from this state by itself. In its '

'simplest form, the last sentence or paragraph is repeated over and over '

'again. We observed this behavior in \\(1.5\\%\\) of pages in the test set, '

'but the frequency increases for out-of-domain documents. Getting stuck in a '

'repetitive loop is a known problem with Transformer-based models, when '

'sampled with greedy decoding [44].\n'

'\n'

'It can also happen that the model alternates between two sentences but '

'sometimes changes some words, so a strict repetition detection will not '

'suffice. Even harder to detect are predictions where the model counts its '

'own repetitions, which sometimes happens in the references section.\n'

'\n'

'In general we notice this kind behavior after a mistake by the model. The '

'model is not able to recover from the collapse.\n'

'\n'

'Anti-repetition augmentationBecause of that we introduce a random '

'perturbation during training. This helps the model to learn how to handle a '

'wrongly predicted token. For each training example, there is a fixed '

'probability that a random token will be replaced by any other randomly '

'chosen token. This process continues until the newly sampled number is '

'greater than a specified threshold (in this case, 10%). We did not observe a '

'decrease in performance with this approach, but we did notice a significant '

'reduction in repetitions. Particularly for out-of-domain documents, where we '

'saw a 32% decline in failed page conversions.\n'

'\n'

'Repetition detectionSince we are generating a maximum of \\(4096\\) tokens '

'the model will stop at some point, however it is very inefficient and '

'resource intensive to wait for a "end of sentence" token, when none will '

'come. To detect the repetition during inference time we look at the largest '

'logit value \\(\\ell_{i}=\\max\\boldsymbol{\\ell}_{i}\\) of the ith token. '

'We found that the logits after a collapse can be separated using the '

'following heuristic. First calculate the variance of the logits for a '

'sliding window of size \\(B=15\\)\n'

'\n'

'\\[\\mathrm{VarWin}_{B}[\\boldsymbol{\\ell}](x)=\\frac{1}{B}\\sum_{i=x}^{x+B}\\left( '

'\\ell_{i}-\\frac{1}{B}\\sum_{j=x}^{x+B}\\ell_{j}\\right)^{2}.\\]\n'

'\n'

'Figure 6: Examples for repetition detection on logits. Top: Sample with '

'repetition, Bottom: Sample without repetition. Left: Highest logit score for '

'each token in the sequence \\(\\ell(x)\\), Center: Sliding window variance '

'of the logits \\(\\mathrm{VarWin}_{B}[\\ell](x)\\), Right: Variance of '

'variance from the position to the end '

'\\(\\mathrm{VarEnd}_{B}[\\ell](x)\\)Here \\(\\ell\\) is the signal of logits '

'and \\(x\\) the index. Using this new signal we compute variances again but '

'this time from the point \\(x\\) to the end of the sequence\n'

'\n'

'\\[\\mathrm{VarEnd}_{B}[\\boldsymbol{\\ell}](x)=\\frac{1}{S-x}\\sum_{i=x}^{S}\\left( '

'\\mathrm{VarWin}_{B}[\\boldsymbol{\\ell}](i)-\\frac{1}{S-x}\\sum_{j=x}^{S}\\mathrm{ '

'VarWin}_{B}[\\boldsymbol{\\ell}](i)\\right)^{2}.\\]\n'

'\n'

'If this signal drops below a certain threshold (we choose 6.75) and stays '

'below for the remainder of the sequence, we classify the sequence to have '

'repetitions.\n'

'\n'

'During inference time, it is obviously not possible to compute the to the '

'end of the sequence if our goal is to stop generation at an earlier point in '

'time. So here we work with a subset of the last 200 tokens and a half the '

'threshold. After the generation is finished, the procedure as described '

'above is repeated for the full sequence.\n'

'\n'

'### Limitations & Future work\n'

'\n'

'**Utility** The utility of the model is limited by a number of factors. '

'First, the problem with repetitions outlined in section 5.4. The model is '

'trained on research papers, which means it works particularly well on '

'documents with a similar structure. However, it can still accurately convert '

'other types of documents.\n'

'\n'

'Nearly every dataset sample is in English. Initial tests on a small sample '

"suggest that the model's performance with other Latin-based languages is "

'satisfactory, although any special characters from these languages will be '

'replaced with the closest equivalent from the Latin alphabet. Non-Latin '

'script languages result in instant repetitions.\n'

'\n'

'**Generation Speed** On a machine with a NVIDIA A10G graphics card with '

'24GB VRAM we can process 6 pages in parallel. The generation speed depends '

'heavily on the amount of text on any given page. With an average number of '

'tokens of \\(\\approx 1400\\) we get an mean generation time of 19.5s per '

'batch for the base model without any inference optimization. Compared to '

'classical approaches (GROBID 10.6 PDF/s [4]) this is very slow, but it is '

'not limited to digital-born PDFs and can correctly parse mathematical '

'expressions.\n'

'\n'

'**Future work** The model is trained on one page at a time without '

'knowledge about other pages in the document. This results in inconsistencies '

'across the document. Most notably in the bibliography where the model was '

'trained on different styles or section titles where sometimes numbers are '

'skipped or hallucinated. Though handling each page separately significantly '

'improves parallelization and scalability, it may diminish the quality of the '

'merged document text.\n'

'\n'

'The primary challenge to solve is the tendency for the model to collapse '

'into a repeating loop, which is left for future work.\n'

'\n'

'## 6 Conclusion\n'

'\n'

'In this work, we present Nougat, an end-to-end trainable encoder-decoder '

'transformer based model for converting document pages to markup. We apply '

'recent advances in visual document understanding to a novel OCR task. '

'Distinct from related approaches, our method does not rely on OCR or '

'embedded text representations, instead relying solely on the rasterized '

'document page. Moreover, we have illustrated an automatic and unsupervised '

'dataset generation process that we used to successfully train the model for '

'scientific document to markup conversion. Overall, our approach has shown '

'great potential for not only extracting text from digital-born PDFs but also '

'for converting scanned papers and textbooks. We hope this work can be a '

'starting point for future research in related domains.\n'

'\n'

'All the code for model evaluation, training and dataset generation can be '

'accessed at https://github.com/facebookresearch/nougat.\n'

'\n'

'## 7 Acknowledgments\n'

'\n'

'Thanks to Ross Taylor, Marcin Kardas, Iliyan Zarov, Kevin Stone, Jian Xiang '

'Kuan, Andrew Poulton and Hugo Touvron for their valuable discussions and '

'feedback.\n'

'\n'

'Thanks to Faisal Azhar for the support throughout the project.\n'

'\n'

'## References\n'

'\n'

'* [1] Sebastian Spiegler. Statistics of the Common Crawl Corpus 2012, June '

'2013. URL '

'https://docs.google.com/file/d/1_9698uglerxB9nAglynHkEgU-iZNm1TvVGuCW7245-WGvZq47teNpb_uL5N9.\n'

'\n'

' * Smith [2007] R. Smith. An Overview of the Tesseract OCR Engine. In _Ninth '

'International Conference on Document Analysis and Recognition (ICDAR 2007) '

'Vol 2_, pages 629-633, Curitiba, Parana, Brazil, September 2007. IEEE. ISBN '

'978-0-7695-2822-9. doi: 10.1109/ICDAR.2007.4376991. URL '

'http://ieeexplore.ieee.org/document/4376991/. ISSN: 1520-5363.\n'

'* Lo et al. [2020] Kyle Lo, Lucy Lu Wang, Mark Neumann, Rodney Kinney, and '

'Daniel Weld. S2ORC: The Semantic Scholar Open Research Corpus. In '

'_Proceedings of the 58th Annual Meeting of the Association for Computational '

'Linguistics_, pages 4969-4983, Online, July 2020. Association for '

'Computational Linguistics. doi: 10.18653/v1/2020.acl-main.447. URL '

'https://aclanthology.org/2020.acl-main.447.\n'

'* Lopez [2023] Patrice Lopez. GROBID, February 2023. URL '

'https://github.com/kermitt2/grobid. original-date: 2012-09-13T15:48:54Z.\n'

'* Moysset et al. [2017] Bastien Moysset, Christopher Kermorvant, and '

'Christian Wolf. Full-Page Text Recognition: Learning Where to Start and When '

'to Stop, April 2017. URL http://arxiv.org/abs/1704.08628. arXiv:1704.08628 '

'[cs].\n'

'* Bautista and Atienza [2022] Darwin Bautista and Rowei Atienza. Scene Text '

'Recognition with Permuted Autoregressive Sequence Models, July 2022. URL '

'http://arxiv.org/abs/2207.06966. arXiv:2207.06966 [cs] version: 1.\n'

'* Li et al. [2022] Minghao Li, Tengchao Lv, Jingye Chen, Lei Cui, Yijuan Lu, '

'Dinei Florencio, Cha Zhang, Zhoujun Li, and Furu Wei. TrOCR: '

'Transformer-based Optical Character Recognition with Pre-trained Models, '

'September 2022. URL http://arxiv.org/abs/2109.10282. arXiv:2109.10282 [cs].\n'

'* Diaz et al. [2021] Daniel Hernandez Diaz, Siyang Qin, Reeve Ingle, '

'Yasuhisa Fujii, and Alessandro Bissacco. Rethinking Text Line Recognition '

'Models, April 2021. URL http://arxiv.org/abs/2104.07787. arXiv:2104.07787 '

'[cs].\n'

'* MacLean and Labahn [2013] Scott MacLean and George Labahn. A new approach '

'for recognizing handwritten mathematics using relational grammars and fuzzy '

'sets. _International Journal on Document Analysis and Recognition (IJDAR)_, '

'16(2):139-163, June 2013. ISSN 1433-2825. doi: 10.1007/s10032-012-0184-x. '

'URL https://doi.org/10.1007/s10032-012-0184-x.\n'

'* Awal et al. [2014] Ahmad-Montaser Awal, Harold Mouchre, and Christian '

'Viard-Gaudin. A global learning approach for an online handwritten '

'mathematical expression recognition system. _Pattern Recognition Letters_, '

'35(C):68-77, January 2014. ISSN 0167-8655.\n'

'* Alvaro et al. [2014] Francisco Alvaro, Joan-Andreu Sanchez, and '

'Jose-Miguel Benedi. Recognition of on-line handwritten mathematical '

'expression using 2D stochastic context-free grammars and hidden Markov '

'models. _Pattern Recognition Letters_, 35:58-67, January 2014. ISSN '

'0167-8655. doi: 10.1016/j.patrec.2012.09.023. URL '

'https://www.sciencedirect.com/science/article/pii/S016786551200308X.\n'

'* Yan et al. [2020] Zuoyu Yan, Xiaode Zhang, Liangcai Gao, Ke Yuan, and Zhi '

'Tang. ConvMath: A Convolutional Sequence Network for Mathematical Expression '

'Recognition, December 2020. URL http://arxiv.org/abs/2012.12619. '

'arXiv:2012.12619 [cs].\n'

'* Deng et al. [2016] Yuntian Deng, Anssi Kanervisto, Jeffrey Ling, and '

'Alexander M. Rush. Image-to-Markup Generation with Coarse-to-Fine Attention, '

'September 2016. URL http://arxiv.org/abs/1609.04938. arXiv:1609.04938 [cs] '

'version: 1.\n'

'* Le and Nakagawa [2017] Anh Duc Le and Masaki Nakagawa. Training an '

'End-to-End System for Handwritten Mathematical Expression Recognition by '

'Generated Patterns. In _2017 14th IAPR International Conference on Document '

'Analysis and Recognition (ICDAR)_, volume 01, pages 1056-1061, November '

'2017. doi: 10.1109/ICDAR.2017.175. ISSN: 2379-2140.\n'

'* Singh [2018] Sumeet S. Singh. Teaching Machines to Code: Neural Markup '

'Generation with Visual Attention, June 2018. URL '

'http://arxiv.org/abs/1802.05415. arXiv:1802.05415 [cs].\n'

'* Zhang et al. [2018] Jianshu Zhang, Jun Du, and Lirong Dai. Multi-Scale '

'Attention with Dense Encoder for Handwritten Mathematical Expression '

'Recognition, January 2018. URL http://arxiv.org/abs/1801.03530. '

'arXiv:1801.03530 [cs].\n'

'* Wang and Liu [2019] Zelun Wang and Jyh-Charn Liu. Translating Math Formula '

'Images to LaTeX Sequences Using Deep Neural Networks with Sequence-level '

'Training, September 2019. URL http://arxiv.org/abs/1908.11415. '

'arXiv:1908.11415 [cs, stat].\n'

'* Zhao et al. [2021] Wenqi Zhao, Liangcai Gao, Zuoyu Yan, Shuai Peng, Lin '

'Du, and Ziyin Zhang. Handwritten Mathematical Expression Recognition with '

'Bidirectionally Trained Transformer, May 2021. URL '

'http://arxiv.org/abs/2105.02412. arXiv:2105.02412 [cs].\n'

'* Mahdavi et al. [2019] Mahshad Mahdavi, Richard Zanibbi, Harold Mouchere, '

'Christian Viard-Gaudin, and Utpal Garain. ICDAR 2019 CROHME + TFD: '

'Competition on Recognition of Handwritten Mathematical Expressions and '

'Typeset Formula Detection. In _2019 International Conference on Document '

'Analysis and Recognition (ICDAR)_, pages 1533-1538, Sydney, Australia, '

'September 2019. IEEE. ISBN 978-1-72813-014-9. doi: 10.1109/ICDAR.2019.00247. '

'URL https://ieeexplore.ieee.org/document/8978036/.')
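As the output above shows, the result is plain text in which headings start with # and math is written between \( ... \) and \[ ... \]. As a rough sketch of how that text might be post-processed, the helpers below list the headings and pull out the math expressions with regular expressions. `outline` and `find_math` are names made up for this example and are not part of Nougat; the sample string is a shortened stand-in for the real file contents.

```python
import re
from typing import List, Tuple

# Markdown-style headings: one to six '#' characters followed by a title.
HEADING_RE = re.compile(r"^(#{1,6})[ \t]+(.+)$", re.MULTILINE)
# Inline math \( ... \) and display math \[ ... \]; both may span lines.
INLINE_MATH_RE = re.compile(r"\\\((.+?)\\\)", re.DOTALL)
DISPLAY_MATH_RE = re.compile(r"\\\[(.+?)\\\]", re.DOTALL)


def outline(text: str) -> List[Tuple[int, str]]:
    """Return (heading level, heading title) pairs in document order."""
    return [(len(m.group(1)), m.group(2).strip()) for m in HEADING_RE.finditer(text)]


def find_math(text: str) -> Tuple[List[str], List[str]]:
    """Return (inline expressions, display expressions) without delimiters."""
    inline = [expr.strip() for expr in INLINE_MATH_RE.findall(text)]
    display = [expr.strip() for expr in DISPLAY_MATH_RE.findall(text)]
    return inline, display


sample = (
    "# Title\n\n"
    "We saw this in \\(1.5\\%\\) of pages.\n\n"
    "## 1 Introduction\n\n"
    "\\[a=b\\]\n"
)
print(outline(sample))
print(find_math(sample))
```

With the real file, passing the string s read earlier to outline(s) would give a quick table of contents for the converted pages.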

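Summary

In this article, we ran OCR on a scientific document using Nougat.

As a simple use case, it looks helpful in situations where you would otherwise copy text out of a PDF and paste it into Google Translate.

This article focused on getting a machine learning model up and running.

For readers who want to study the subject in a more systematic, academic way, books such as the following are recommended. Please give them a read.

Udemy courses such as those below are also recommended as a stepping stone from simply being able to run models to becoming an engineer who understands and can apply them.

References

1. Paper - Nougat: Neural Optical Understanding for Academic Documents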
